A useful Hyper-V feature in Windows 10 has now been disabled. The RemoteFX vGPU feature lets Hyper-V Virtual Machines (VMs) on Windows Server 2008 R2, Server 2012, Server 2016, and Windows 10 use the host machine's physical Graphics Processing Unit (GPU) to render their graphics output.

With the Windows 10 update KB4571757, Microsoft has discontinued this feature because of a critical remote code execution vulnerability.

Let’s look into the details of the feature, why it was discontinued, and whether or not we can find a way for it to still work.


What is RemoteFX vGPU?

With the RemoteFX vGPU feature, VMs running on Hyper-V can use the host computer's physical GPU for video rendering and image processing. This takes that load off the CPU and lets users run graphics-heavy workloads on their VMs using the shared GPU.

With this feature, each VM does not need its own dedicated GPU, and one GPU can serve several VMs, which improves scalability and makes better use of both the GPU and the VMs. Head on to Microsoft’s web page to learn more about the feature.

Why did Microsoft remove the RemoteFX vGPU feature?

The RemoteFX vGPU feature is not new; it was introduced in Windows 7. However, it contains a vulnerability that attackers can exploit to run code on the host machine. The feature fails to properly validate input from an authenticated user on a guest VM, so an attacker can run a specially crafted application on the VM that attacks the host's GPU drivers. Once attackers gain access to the host machine, they can execute arbitrary commands and scripts on it.

Microsoft normally fixes such vulnerabilities through its regular updates, but it could not do so here because the flaw is architectural.

Microsoft began removing this feature from various versions of Windows in July 2020, and the September 2020 cumulative update for Windows 10 disabled it in all editions of Windows 10 version 2004.

According to Microsoft, users can still re-enable the feature with specific commands until February 2021, but they should start moving to alternative methods, which are discussed further down the article. Microsoft has also published a notification on disabling RemoteFX vGPU.

How to enable RemoteFX vGPU on Hyper-V running on Windows 10

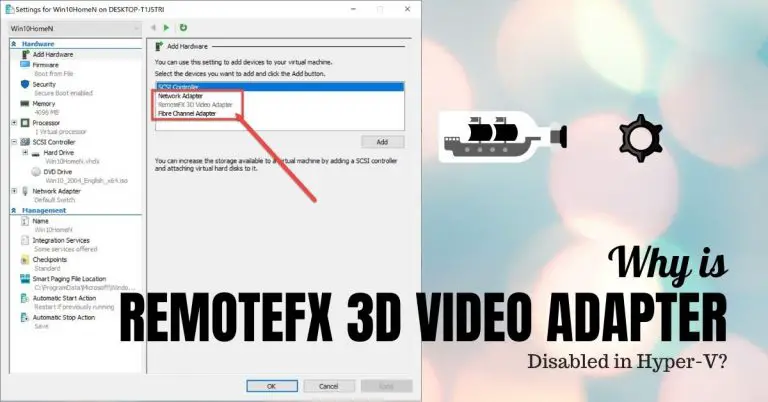

Although Microsoft has greyed out the option to enable RemoteFX vGPU in Hyper-V on Windows 10, it can still be enabled with a PowerShell command. The Group Policy settings are also still present in the guest operating systems (VMs) and can be used to enable the feature on the VM just as before.

- First, start the VM you want to configure and open the Group Policy Editor by typing gpedit.msc in the Run dialog (Win + R).

- In the Group Policy Editor, navigate to the following from the left pane:

- Computer Configuration -> Administrative Templates -> Windows Components -> Remote Desktop Services -> Remote Desktop Session Host -> Remote Session Environment -> RemoteFX for Windows Server

- In the right pane, double-click on Configure RemoteFX.

- In the Configure RemoteFX properties window, select Enabled, and then click Apply and OK.

- Now open the Command Prompt and type gpupdate /force to update the Group Policies.

- Download and install the recommended driver for your physical GPU.

- Now shut down the VM and return to the host computer running Hyper-V.

- Because the RemoteFX 3D Video Adapter option is greyed out in the VM's settings, we will add it through PowerShell. Open PowerShell with administrative rights.

- Enter the following command, replacing (name) with the name of your virtual machine:

Add-VMRemoteFX3dVideoAdapter -VMName (name)

- Now open the VM's settings in Hyper-V Manager, and you will find the RemoteFX 3D Video Adapter under the Processor tab. Click it and configure it to suit your needs.

You can now start and connect to the virtual machine, which will use the host machine’s GPU to process its graphics workload. You can also configure multiple VMs to share a single physical GPU.
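If you are configuring several VMs, the host-side part of these steps can be wrapped in a small PowerShell function. This is a minimal sketch, assuming an elevated PowerShell session on a Hyper-V host where the RemoteFX cmdlets are still available; the function name Add-RemoteFxAdapterToVm is hypothetical, while Get-VM, Get-VMRemoteFx3dVideoAdapter and Add-VMRemoteFx3dVideoAdapter are Hyper-V module cmdlets. It only covers the host side; the Group Policy steps inside the guest still have to be done by hand.

```powershell
# Minimal sketch: add a RemoteFX 3D video adapter to a VM from the host.
# Assumptions: elevated session on a Hyper-V host where the RemoteFX
# cmdlets still exist; Add-RemoteFxAdapterToVm is a hypothetical name.
function Add-RemoteFxAdapterToVm {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$VMName
    )

    # The steps in this article add the adapter with the VM shut down,
    # so refuse to continue if it is still running.
    $vm = Get-VM -Name $VMName -ErrorAction Stop
    if ($vm.State -ne 'Off') {
        throw "VM '$VMName' must be turned off first (current state: $($vm.State))."
    }

    # Add an adapter only if the VM does not already have one.
    if (-not (Get-VMRemoteFx3dVideoAdapter -VMName $VMName)) {
        Add-VMRemoteFx3dVideoAdapter -VMName $VMName
    }

    # Output the VM's RemoteFX adapter settings for review.
    Get-VMRemoteFx3dVideoAdapter -VMName $VMName
}
```

For example, Add-RemoteFxAdapterToVm -VMName 'Win10-VM' would add the adapter to a VM named Win10-VM (a placeholder name). To share the GPU across several VMs, you could pipe their names in: 'VM1', 'VM2' | ForEach-Object { Add-RemoteFxAdapterToVm -VMName $_ }.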

Alternative to RemoteFX vGPU

Microsoft plans to remove the feature permanently by February 2021. However, it has not left its users stranded: Microsoft proposes an alternative that assigns a physical PCIe GPU directly to a VM through Discrete Device Assignment (DDA).

This method has three phases, which must be completed in order to give a VM a dedicated GPU:

- Configure the VM for DDA

- Dismount the GPU from the host computer

- Assign the GPU to the VM

Configure the VM for DDA

On the host computer, run the following commands in an elevated PowerShell window, one after the other, to configure the VM's settings. Replace (name) with the name of the VM:

Set-VM -Name (name) -AutomaticStopAction TurnOff
Set-VM -GuestControlledCacheTypes $true -VMName (name)
Set-VM -LowMemoryMappedIoSpace 3Gb -VMName (name)
Set-VM -HighMemoryMappedIoSpace 33280Mb -VMName (name)
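The four Set-VM calls can also be wrapped in a function that reads the values back, which makes it easy to confirm they took effect. This is a minimal sketch, assuming an elevated session on the Hyper-V host and a VM that is turned off; Set-DdaVmSettings and its parameter names are hypothetical, and the MMIO defaults are simply the values used in the commands above.

```powershell
# Minimal sketch: apply the DDA VM settings and read them back.
# Assumptions: elevated session on the Hyper-V host, VM turned off;
# Set-DdaVmSettings is a hypothetical name.
function Set-DdaVmSettings {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$VMName,
        # Defaults are the values used in the article's commands.
        [long]$LowMmioBytes = 3GB,
        [long]$HighMmioBytes = 33280MB
    )

    # Turn the VM off, rather than saving its state, when the host stops.
    Set-VM -Name $VMName -AutomaticStopAction TurnOff
    # Let the guest control CPU caching (enables write-combining).
    Set-VM -Name $VMName -GuestControlledCacheTypes $true
    # 32-bit (below 4 GB) and above-32-bit MMIO space for the device.
    Set-VM -Name $VMName -LowMemoryMappedIoSpace $LowMmioBytes
    Set-VM -Name $VMName -HighMemoryMappedIoSpace $HighMmioBytes

    # Read the settings back to confirm they were applied.
    $properties = @(
        'Name', 'AutomaticStopAction', 'GuestControlledCacheTypes',
        'LowMemoryMappedIoSpace', 'HighMemoryMappedIoSpace'
    )
    Get-VM -Name $VMName | Select-Object -Property $properties
}
```

Usage would look like Set-DdaVmSettings -VMName 'Win10-VM' (a placeholder VM name). Keeping the MMIO sizes as parameters leaves room to adjust them for a GPU that needs a different amount of address space.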

Dismount the GPU from the host computer

First, you need to disable the GPU and then dismount it from the host. Before that, you need the GPU's location path on the PCIe bus. You can find it as follows:

- Open Device Manager by typing devmgmt.msc in the Run dialog.

- Now expand Display adapters and right-click the GPU. Select Properties from the context menu.

- Go to the Details tab and select Location Paths from the drop-down menu under Property.

- Note down the entry that starts with “PCIROOT”, as you will need it when dismounting the GPU from the host device.
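If you prefer PowerShell to Device Manager, the same location path can be read with the PnpDevice module that ships with Windows. This is a minimal sketch; Select-PciRootPath and Get-GpuLocationPath are hypothetical helper names, while Get-PnpDevice and Get-PnpDeviceProperty (with the DEVPKEY_Device_LocationPaths key) are built-in cmdlets. It lists every present display adapter with its name, instance ID and PCIROOT location path.

```powershell
# Minimal sketch: find the GPU location path without Device Manager.
# Assumes the built-in PnpDevice module; both function names are hypothetical.

# Pick the entry that starts with PCIROOT from a list of location paths.
function Select-PciRootPath {
    param([string[]]$Paths)
    $Paths | Where-Object { $_ -like 'PCIROOT*' } | Select-Object -First 1
}

# Return name, instance ID and PCIROOT path for each present display adapter.
function Get-GpuLocationPath {
    Get-PnpDevice -Class Display -PresentOnly | ForEach-Object {
        $prop = Get-PnpDeviceProperty -InstanceId $_.InstanceId -KeyName 'DEVPKEY_Device_LocationPaths'
        [pscustomobject]@{
            Name         = $_.FriendlyName
            InstanceId   = $_.InstanceId
            LocationPath = Select-PciRootPath -Paths $prop.Data
        }
    }
}
```

On the host, Get-GpuLocationPath | Format-List prints one record per display adapter; copy the LocationPath of the GPU you want to assign. The InstanceId shown is the same value Device Manager lists as the Device instance path.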

Next, disable the device:

- Open the GPU's Properties window in Device Manager.

- Go to the Driver tab and click Disable device.

Now that the device has been disabled, you need to dismount it.

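Enter the following command in an elevated PowerShell window:

Dismount-VMHostAssignableDevice -Force -LocationPath "(LocationPath)"

Replace (LocationPath) with the PCIROOT location path you noted earlier. Keep the quotation marks, because the path contains parentheses and # characters that PowerShell would otherwise interpret.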
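Disabling and dismounting can also be combined into one PowerShell step, with Disable-PnpDevice standing in for the Disable device button. This is a minimal sketch, assuming an elevated session on the Hyper-V host; Dismount-GpuFromHost is a hypothetical name. InstanceId is the value shown as Device instance path on the Details tab in Device Manager, and LocationPath is the PCIROOT path. Because the function supports -WhatIf, you can preview what it would do before touching the device.

```powershell
# Minimal sketch: disable the GPU and dismount it from the host.
# Assumptions: elevated session on the Hyper-V host;
# Dismount-GpuFromHost is a hypothetical name.
function Dismount-GpuFromHost {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        # "Device instance path" from the Details tab in Device Manager.
        [Parameter(Mandatory)] [string]$InstanceId,
        # The PCIROOT location path noted earlier.
        [Parameter(Mandatory)] [string]$LocationPath
    )

    # With -WhatIf, ShouldProcess returns $false and nothing is changed.
    if ($PSCmdlet.ShouldProcess($LocationPath, 'Disable and dismount GPU')) {
        # Same effect as "Disable device" on the Driver tab.
        Disable-PnpDevice -InstanceId $InstanceId -Confirm:$false -ErrorAction Stop
        # Detach the device from the host so it can be assigned to a VM.
        Dismount-VMHostAssignableDevice -Force -LocationPath $LocationPath -ErrorAction Stop
    }
}
```

Run it once with -WhatIf to check the target, then again without it to disable and dismount the GPU.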

Once done, you may proceed to the next phase.

Assign the GPU to the VM

Now all that remains is to tell Hyper-V to let the VM use the physical GPU. Run the following command in an elevated PowerShell window:

Add-VMAssignableDevice -LocationPath "(LocationPath)" -VMName (name)

Replace (LocationPath) with the location path you noted earlier and (name) with the name of the VM.

You can now start the guest operating system; the video adapter it uses will be the physical GPU from your host computer.
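The assignment step can be wrapped in a function that first checks the GPU really has been dismounted and afterwards lists the VM's assigned devices. This is a minimal sketch, assuming an elevated session on the host, a VM that is turned off, and that Get-VMHostAssignableDevice reports a LocationPath property for each dismounted device; Add-GpuToVm and its -Start switch are hypothetical, while Get-VMHostAssignableDevice, Add-VMAssignableDevice, Get-VMAssignableDevice and Start-VM are Hyper-V cmdlets.

```powershell
# Minimal sketch: assign a dismounted GPU to a VM and confirm it.
# Assumptions: elevated session on the Hyper-V host, VM turned off;
# Add-GpuToVm and its -Start switch are hypothetical.
function Add-GpuToVm {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string]$VMName,
        [Parameter(Mandatory)] [string]$LocationPath,
        [switch]$Start
    )

    # Only devices already dismounted from the host can be assigned.
    $free = Get-VMHostAssignableDevice | Where-Object LocationPath -eq $LocationPath
    if (-not $free) {
        throw "No dismounted device with location path '$LocationPath' was found."
    }

    Add-VMAssignableDevice -LocationPath $LocationPath -VMName $VMName -ErrorAction Stop

    # List the devices now assigned to the VM; the GPU should appear here.
    Get-VMAssignableDevice -VMName $VMName

    # Optionally boot the VM straight away.
    if ($Start) {
        Start-VM -Name $VMName
    }
}
```

Failing early when the path is not among the host's assignable devices gives a clearer message than the error from Add-VMAssignableDevice if the dismount step was skipped.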

If at any time you want to return the GPU to the host, shut down the VM and run the following two commands, in this order, in an elevated PowerShell window, replacing (LocationPath) with the location path and (name) with the name of the VM:

Remove-VMAssignableDevice -LocationPath "(LocationPath)" -VMName (name)
Mount-VMHostAssignableDevice -LocationPath "(LocationPath)"
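The return path can be scripted as well. Since the GPU was disabled before it was dismounted, it should come back to the host still disabled, so this sketch also re-enables it with Enable-PnpDevice. Assumptions: an elevated session on the host, a VM that has already been shut down, a GPU that returns with the same instance path it had before, and the hypothetical function name Restore-GpuToHost; InstanceId is the Device instance path from Device Manager.

```powershell
# Minimal sketch: give an assigned GPU back to the host.
# Assumptions: elevated session on the Hyper-V host;
# Restore-GpuToHost is a hypothetical name.
function Restore-GpuToHost {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string]$VMName,
        [Parameter(Mandatory)] [string]$LocationPath,
        # "Device instance path" from Device Manager, used to re-enable the GPU.
        [Parameter(Mandatory)] [string]$InstanceId
    )

    # The device is removed while the VM is shut down.
    $vm = Get-VM -Name $VMName -ErrorAction Stop
    if ($vm.State -ne 'Off') {
        throw "Shut down VM '$VMName' first (current state: $($vm.State))."
    }

    # Same order as the article: remove from the VM, then mount on the host.
    Remove-VMAssignableDevice -LocationPath $LocationPath -VMName $VMName -ErrorAction Stop
    Mount-VMHostAssignableDevice -LocationPath $LocationPath -ErrorAction Stop

    # Undo the earlier "Disable device" step.
    Enable-PnpDevice -InstanceId $InstanceId -Confirm:$false
}
```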

Closing words

RemoteFX vGPU was not around for long in Windows 10 version 2004, but people liked the idea of it. Seeing it go may not be as troublesome as expected, until you factor in the cost.

Although Microsoft offers an alternative that dedicates a GPU to each virtual machine, installing as many GPUs in the host computer as there are virtual machines is rarely practical. The hardware costs would be too high, and so would the power consumption.

Microsoft needs to find a better solution, as the alternative it provides is not feasible for most users.